The first three days of the ICHEP conference have consisted entirely of parallel sessions on a variety of topics. Heavy quark physics, supersymmetry, CP violation and rare decays, exotic physics, computing and data analysis, particle astrophysics – too much for any one person to see! I did my fair share of running around. Here are some of my favorite moments.

As an Italian, please speak for DAMA

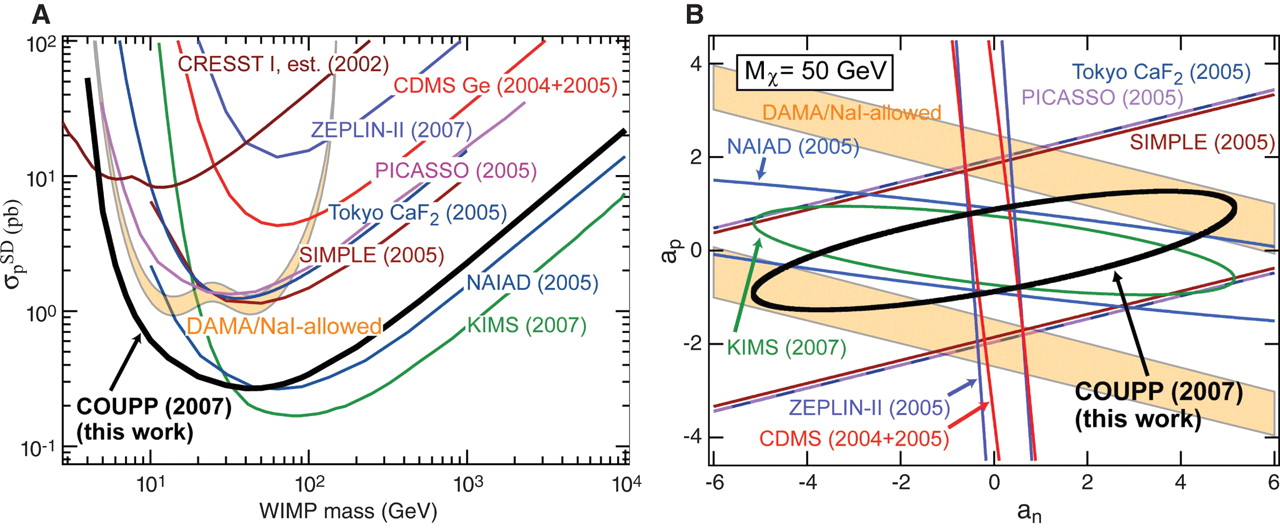

I attended several talks in the particle astrophysics sessions. One session opened with dark matter searches, leading with the liquid noble-gas technologies followed by CDMS. The liquid noble-gas speaker, a physicist of Italian origin, reviewed the current results from this rapidly growing technology. He discussed the challenges it faces, and speculated that if its current problems can be overcome, it can scale up faster than any other technology, making it an excellent contender in the search for dark matter.

After the talk, one of the questioners from the audience made the following statement, which I paraphrase. “You said that these experiments are designed to find dark matter, but hasn’t dark matter already been found? After all, the DAMA experiment has reported a signal consistent with dark matter. Why did you not comment on this result? As an Italian, shouldn’t you be speaking on their behalf?”

This led to some chuckling (and a flush of anger from me), and then a lot of arguing. The speaker diplomatically addressed the question, then offered his personal opinion on one example of a problem the DAMA collaboration needs to address. He declined to act as a spokesperson for the diverse experiments based in his home country, and he did not take the nationalism bait laid out by the questioner. A woman in the audience demanded that the speaker explain how the problem he had raised could produce a time-dependent signal of the type DAMA observes. He said he didn’t know of a way, but that it was nonetheless a valid physics question for DAMA to address. The moderator, a well-known physicist from Fermilab, quelled the upheaval in the audience and remarked on the liveliness of the forum.

From my own perspective, it’s unfair to treat an Italian physicist as a spokesperson or apologist for experiments in Italy, just as it’s unfair to ask an American to explain this country’s failure to fund science reliably. Scapegoating an entire nation’s scientists for one experiment, whether that experiment is right or not, seems a fairly primitive practice in an age when people should be enlightened enough to separate prejudice rooted in a national stereotype from the scientific question of whether a result has been thoroughly vetted and cross-checked. I’d invite the questioner at this forum to propose a way to fund an experiment designed to directly check the DAMA result.

I like the leptonic when it’s semi-leptonic

My graduate thesis reported on my efforts to measure the rare decay of a B meson into a tau lepton and a tau neutrino. This process, expected to happen in about one out of every 10,000 B decays, seems none too rare when you start with a sample of 41 million B decays. However, the tau is a tricky lepton: it is too short-lived to be seen directly in our detector, and it produces at least one neutrino when it decays. This makes the signature of this rare decay easy to fake by many kinds of background processes with much higher rates.

That work, which I did in 2002-2004, taught me some painful lessons. I learned that you need a suite of cross-checks to double- and triple-check your understanding of those nasty background processes. I learned that even the best simulations in the world can fail to model whole classes of background, or get their physics just wrong enough to cause trouble. Fail to respect, and even fear, these backgrounds, and they can artificially push up your observed rate of this rare decay. I’ve worked on this search using samples of 41 million and 193 million charged Bs, and a student in my group is now pursuing it for his own thesis with a sample of about 220 million charged Bs.
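The arithmetic behind “none too rare” is just the sample size times the branching fraction. The sketch below runs that multiplication for the three sample sizes mentioned above, assuming a branching fraction of exactly 1 in 10,000 as quoted. It counts decays produced, before any reconstruction or selection; the `efficiency` parameter is a hypothetical placeholder, not a measured value, and the number surviving a real selection is far smaller.

```python
# Rough expected number of B -> tau nu decays in a data sample,
# assuming a branching fraction of 1 in 10,000 (the figure quoted in the text).
BRANCHING_FRACTION = 1e-4


def expected_signal(n_charged_b: int, efficiency: float = 1.0) -> float:
    """Return the expected number of signal decays in a sample.

    `efficiency` is a hypothetical overall selection efficiency; the default
    of 1.0 gives the number produced, before any reconstruction or cuts.
    """
    return n_charged_b * BRANCHING_FRACTION * efficiency


# Sample sizes mentioned in the text (numbers of charged B mesons).
samples = {
    "first search": 41_000_000,
    "second search": 193_000_000,
    "student's thesis": 220_000_000,
}

for label, n in samples.items():
    # Format with a thousands separator and no decimal places.
    print(f"{label}: {expected_signal(n):,.0f} decays produced")
```

With 41 million charged Bs this gives roughly 4,100 decays produced, which is why the process sounds plentiful; the hard part is separating the ones that survive selection from backgrounds with much higher rates.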

When Belle presented their latest search for this rare process, they unveiled their use of a technique developed by another student and colleague of mine from U.W. Madison. For her thesis, she used only the cleanest, high-efficiency semi-leptonic B decays to “tag” each event as containing a B decay. The rest of the event, which should come from the second B, is then examined to see whether it is consistent with the rare process. This still leaves a lot of background, which you have to study carefully. Belle’s speaker was a younger member of the collaboration, and it wasn’t clear that he had been prepared for the difficult issues of background cross-checks when he gave his talk. Several of us on BaBar who have worked with this technique for almost a decade pelted him with questions about cross-checks and background studies. His responses weren’t reassuring.

It’s a reminder to everybody that before you get too excited about a result, you have to think, think, think and check, check, check. Belle might be totally right, but their results were fishy, and fishy in a way that’s consistent with things we on BaBar have long learned to fear and respect.

Enough with the model building, enough with the expectations

I’m sure the LHC representatives will present the status of the machine in their plenary talks. People have told me that the LHC’s superconducting magnets are all cold and that beams could start entering the ring within a few weeks. I was a bit surprised by the number of talks speculating about LHC physics (how well the experiments will do in searches for supersymmetry and the Higgs, in top quark measurements, and so on), delivered with a conviction that should only come from actually analyzing data. They don’t have data, of course; they aren’t running yet. It annoyed me that these speakers presented their simulation results with such certainty.

Treating simulations as gospel before any data exist, especially at an energy where NO machine has ever operated, is foolish. Personally, I would have liked to see more humility ahead of operations, and more discussion of the technical progress on the big experiments and of the hard decisions made to favor certain physics channels at the expense of others. Statements like “I assure you that we can achieve this sensitivity in one year of data” are only believable when you already have two years of data behind you.

As for the theorists, there is an enormous amount of model building going on. One theory colleague of mine summarized it this way: everybody wants to get their models out in papers before the LHC turns on. If they wait, they risk having their idea made obsolete by LHC data before they can sell it; if they publish now, they stand to take credit if their particular model of nature turns out to be right.

Don’t get me wrong: I get it. But it feels like noise.

Data is all that matters right now, because we’re not making much progress just thinking about these problems.

What’s the ILC?

One last note. There hasn’t been a single talk about a future lepton collider, such as the proposed International Linear Collider (ILC), at least not one that I have seen. I felt its absence most deeply when, at the particle astrophysics session on dark matter, an audience member asked a speaker the following question: “If we discover dark matter, how are we going to measure its quantum numbers?”

The answer, of course, is a lepton collider. If dark matter is discovered in a manner consistent with a weakly interacting massive particle (WIMP), then a lepton collider running at sufficiently high energy could produce it in large quantities.

Nobody in the audience said anything in response to this question. The speaker didn’t really have a good answer.