In my last post, I started by complaining about the way in which information is not cited in news articles. As I got caught up in the actual reference for the Times article on the new Splenda study, I completely stopped caring about my original complaint. Instead, I found some fascinating little things in this paper that really bear scrutiny.

The paper is available in the online version of the Journal of Toxicology and Environmental Health [1]. I suspect that most of you will not have access to this article, but that’s the way science publishing works. I’ll try to give enough details about the study here so that you can follow my concerns.

The first page or so of the paper lays out the history of Splenda and the experimental methods of the study. Five groups of 10 male rats each were used. Each group was fed the same diet for one week before the start of the study. The groups were then given the following feeding “assignments”: a control group receiving plain water in its water feeder, and four experimental groups receiving 100 mg/kg, 300 mg/kg, 500 mg/kg, and 1000 mg/kg of Splenda in their water.

The paper makes no mention of a double-blind approach. If I had conducted this study, I would have labeled the feeding bottles so that only a computer knew (via a barcode) which held control water and which held Splenda. Measurements would be made blind, and only at the end would the link between group and feeder contents be revealed. This prevents researchers from unconsciously affecting the outcome, for instance by treating rats getting Splenda even slightly differently from those getting water.
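The bottle-labeling scheme described above can be sketched in a few lines of Python. This is a minimal sketch, not anything from the paper: random hex codes stand in for barcodes, and the function names `make_blinded_labels` and `unblind` are hypothetical. Whoever records measurements sees only opaque codes; the code-to-group key is consulted once, after data collection.

```python
import secrets

def make_blinded_labels(groups, rats_per_group):
    """Give every water bottle an opaque random code (a stand-in for a barcode).

    Returns (labels, key). `labels` is the shuffled list of codes printed on the
    bottles; `key` maps each code to its treatment group and stays sealed until
    all measurements are in.
    """
    key = {}
    for group in groups:
        for _ in range(rats_per_group):
            code = secrets.token_hex(4)
            while code in key:  # regenerate on the (very unlikely) collision
                code = secrets.token_hex(4)
            key[code] = group
    labels = list(key)
    secrets.SystemRandom().shuffle(labels)  # bottle order reveals nothing
    return labels, key

def unblind(measurements, key):
    """Group measurements (code -> value) by treatment, only after data collection."""
    by_group = {}
    for code, value in measurements.items():
        by_group.setdefault(key[code], []).append(value)
    return by_group

# Hypothetical usage with the study's five groups of 10 rats.
GROUPS = ["control", "100 mg/kg", "300 mg/kg", "500 mg/kg", "1000 mg/kg"]
labels, key = make_blinded_labels(GROUPS, rats_per_group=10)
```

In a real lab the key would be held by someone outside the measurement team; the dictionary here just stands in for that sealed envelope.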

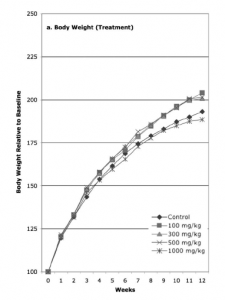

Setting aside the blinding issue for a second, my first piece of confusion came from Fig. 1 in the study. Fig. 1 shows the percent gain in weight, per group, as a function of the 12 weeks they were receiving water or splenda-water. I reproduce this plot below.

Even reading this plot raises a few annoying issues. First, no attempt was made to quantify the uncertainty in the weight measurements. Weight naturally fluctuates over the course of a day, so they could have assigned an error based on how much each rat’s weight varied within a day, every day, during the week before the study began. Better still, they should have let the rats reach a weight equilibrium on their diet and then measured that variation. Second, it’s clear the experimenters didn’t wait long enough for the rats’ weight to reach equilibrium before starting the study, since even the control group gains significant weight (nearly doubling).
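One way to turn the “weight fluctuates over the day” idea into error bars: weigh each rat several times a day during the baseline week, pool the within-day variance, and propagate that uncertainty into the percent gain. The sketch below assumes each weighing carries the same independent error sigma and uses first-order error propagation; for a gain g = 100 (w_t - w_0)/w_0 this gives sigma_g = (100 sigma / w_0) * sqrt(1 + (w_t/w_0)^2). The function names and example numbers are hypothetical, not taken from the paper.

```python
import math
import statistics

def within_day_sd(daily_weighings):
    """Pooled within-day standard deviation, in grams.

    `daily_weighings` is a list of days; each day is a list of repeated
    weighings of the same rat. Pooling the per-day variances removes
    day-to-day growth and keeps only the fluctuation within a day.
    """
    num = sum((len(day) - 1) * statistics.variance(day) for day in daily_weighings)
    dof = sum(len(day) - 1 for day in daily_weighings)
    return math.sqrt(num / dof)

def percent_gain_with_error(w0, wt, sigma):
    """Percent weight gain 100*(wt - w0)/w0 and its 1-sigma uncertainty.

    Assumes both weights carry the same independent error `sigma`
    (first-order error propagation).
    """
    gain = 100.0 * (wt - w0) / w0
    err = 100.0 * sigma / w0 * math.sqrt(1.0 + (wt / w0) ** 2)
    return gain, err

# Made-up weighings for one rat on two baseline days.
sigma = within_day_sd([[200, 202, 204], [210, 212, 214]])  # -> 2.0
gain, err = percent_gain_with_error(w0=200.0, wt=380.0, sigma=sigma)
```

For these made-up numbers the gain is 90% with an error bar of roughly ±2 percentage points.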

Weight increases slightly faster than in the control for the 100, 300, and 500 mg/kg groups, but NOT for the 1000 mg/kg group, which actually appears to show slightly less weight gain than the control. This data is confusing.

What’s even more confusing is the authors’ interpretation of their data. Quoting from the paper:

> At the lowest Splenda dose level of 100 mg/kg, rats showed a significant increase in body weight gain relative to controls; the changes at 300 mg/kg, 500 mg/kg, and 1000 mg/kg did not differ significantly from controls.

What? My reading of this graph is that three of the four experimental groups exhibited slightly more weight gain than the control; in fact, their percent weight gains were nearly IDENTICAL to one another. The largest dose (fourth group) showed no gain or even a slight weight loss relative to control. I’d love to get a second opinion on this conclusion.

Now I’m really skeptical. On the easiest-to-interpret part of their study, they’ve drawn what seems to be a completely false conclusion from their data. So, as a scientist, I have to ask: “If they can’t interpret the weight data, how can they interpret the chemical and bacteriological analysis data?”

By studying the feces, the researchers can count the number of colony-forming units (CFU) of gut bacteria. They do not show data from the 7 days before the start of the study, when the rats were being started on their diet; we see only the data from the 12 experimental weeks and the 12 recovery weeks. The average count (in units of 10^9 CFU/g) drops relative to control in every Splenda-consuming group, by a factor of 3 to 5 depending on the kind of bacteria studied. The size of the drop largely tracks the Splenda dose: more Splenda, bigger drop.

One question occurred to me here: is it the sucralose, or something else, doing the work of dropping the bacterial count? For instance, if I could do a study like this I would:

- Add a group given SUGAR in the water

- Add a group given all the ingredients in Splenda EXCEPT sucralose, namely maltodextrin (93.59%), glucose (1.08%), and moisture (4.23%). These add up to 98.9%, so sucralose accounts for just 1.1% of Splenda! How can we be sure it’s the cause?
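The 1.1% figure is simply what is left after the other three ingredients, and it also tells us how much sucralose each experimental group actually received. A short check, assuming the composition quoted above and that the group doses are mg of Splenda per kg of body weight:

```python
# Composition of Splenda as quoted above (percent by mass).
COMPOSITION = {"maltodextrin": 93.59, "glucose": 1.08, "moisture": 4.23}

# Whatever is not maltodextrin, glucose, or moisture is taken to be sucralose.
# Rounding to 2 decimals removes floating-point noise from the subtraction.
sucralose_pct = round(100.0 - sum(COMPOSITION.values()), 2)

# Splenda doses given to the four experimental groups (mg per kg body weight).
SPLENDA_DOSES = [100, 300, 500, 1000]

# Sucralose actually delivered to each group, in mg/kg.
sucralose_doses = {d: round(d * sucralose_pct / 100.0, 2) for d in SPLENDA_DOSES}

print(f"sucralose share: {sucralose_pct}%")
for splenda, sucralose in sucralose_doses.items():
    print(f"{splenda} mg/kg Splenda -> {sucralose} mg/kg sucralose")
```

So the 100 mg/kg Splenda group received about 1.1 mg/kg of sucralose, and even the 1000 mg/kg group received only about 11 mg/kg.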

I also found it fascinating that during the 12-week recovery period, the control group’s gut bacteria levels largely PLUMMETED (by almost a factor of 2 in many cases), while the other groups’ levels decreased a little, flattened out, or rose. Again, this is confusing data! After 24 weeks, the control group fares almost as badly as the experimental groups!

Given these big-ticket items (weight gain and changes in beneficial bacteria) and the very confusing data, I am surprised the researchers drew any strong conclusions. I would warn the press not to jump on this; wait until the medical research community has had a chance either to reproduce the work or to criticize it (encouraging the researchers to follow up their paper). But don’t just read the abstract and accept the conclusions. Read the paper and look at the data.

Data is GOD.

[1] Mohamed B. Abou-Donia et al., “Splenda Alters Gut Microflora and Increases Intestinal P-Glycoprotein and Cytochrome P-450 in Male Rats,” Journal of Toxicology and Environmental Health, Part A 71, no. 21 (2008): 1415, doi:10.1080/15287390802328630.

6 thoughts on “Data analysis: the new Splenda study”

Thank you for your analysis.

As we use Splenda, the NYT article was of interest.

Your article was the only one I could find with a critical analysis; yes, I cannot access the original without payment, etc.

As a retired medical doctor I appreciate your dissection (and demolition) of the methodology, the data, and especially the unproved conclusions.

Obviously another “made to order” article, which should not have been published in any scientific journal with proper review policies.

Particle physics, my chosen field of research, is very different from medical research. However, since medical research involves quality of life, and often questions of life or death, I become gravely concerned when it uses approaches that appear less rigorous than those used in physics. While the subatomic world can be quite forgiving, the lives of our fellow humans are not a thing to take lightly. Many people depend on Splenda to add some character to their food; a question about its ill effects on the body should never be dismissed, nor should the work to answer that question be riddled with holes obvious even to a particle physicist.

I very much hope that, more than demolishing this work, I have made the point that it bears scrutiny and repetition by researchers with the tools and training to handle biological research. When I learned about the process of science, I learned that a fundamental part of it is the reproducibility of results. I am eager to see this study repeated by an independent lab (non-Duke, different funding). I also hope the researchers themselves will be encouraged to revisit this study from scratch and see whether even they can reproduce these results.

Sadly, you have ignored the most important and best-documented finding: the clear, dose-dependent induction of two intestinal cytochromes, Cyp3A4 and Cyp2D1. The dogma is that sucralose is not metabolized. Here the authors show very convincing Western blots demonstrating induction and recovery of two different cytochromes, as well as some interesting findings on P-glycoprotein. They probably need to repeat the P-gp results before they try to explain them.

The cytochrome results are extremely important. If the findings are reproduced, they suggest that eating a lot of Splenda (it would take diet sodas all day plus baked goods to hit 5 mg/kg sucralose) could alter the bioavailability of some prescription medications.

Thanks for posting this comment, ET. I agree with you – I would very much like to see any or all of this reproduced by another group of analysts. Their procedure is certainly documented well enough that this is possible, funding permitting.

Regarding my silence on the cytochrome results: I chose to focus on places where obvious questions jumped out at me. Take my lack of comment on those results as a positive thing for the paper. However, if 2/3 of a paper leaves many questions about methodology and interpretation wide open, it’s hard for me to extend my professional trust to the remaining 1/3, even though it might be perfectly accurate work.

It boils down to verification. Since I’m not tied into this community, I rely on hearing about such things (verification papers) from external sources, including TV news (which is how I heard about this paper in the first place). You sound quite knowledgeable about this material; if you know of a follow-up paper, even by the same authors, I’d appreciate hearing about it.

Good analysis of this paper.

The authors of this paper used an inappropriate statistical analysis of the data, which is probably why they found a significant increase in body weight in the 1.1 mg/kg sucralose group (the 100 mg/kg Splenda group) but not in the others, despite a similar magnitude of increase.

The problem is that the authors did not adjust for multiple comparisons in their statistical analysis. They compared four treatment groups to a single control, which is 4 comparisons. At the usual 0.05 significance level, the chance of at least one false positive rises to as much as 0.05 × 4 = 0.20 (for four independent tests it would be 1 - 0.95^4 ≈ 0.19). Thus, their “significant” increase was likely a chance finding.
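The arithmetic in this comment is easy to check. The sketch below computes the simple alpha × m bound used above, the exact familywise rate for m independent tests, 1 - (1 - alpha)^m, and the Bonferroni-adjusted per-comparison threshold alpha / m. The function names are illustrative. Because all four comparisons share one control group they are not independent, but alpha × m remains an upper bound on the chance of at least one false positive either way.

```python
def familywise_error(alpha, m):
    """Chance of at least one false positive among m independent tests at level alpha."""
    return 1.0 - (1.0 - alpha) ** m

def bonferroni_alpha(alpha, m):
    """Per-comparison threshold that keeps the familywise error rate at most alpha."""
    return alpha / m

alpha, m = 0.05, 4  # four Splenda groups, each compared with the control
print(f"upper bound (alpha*m):     {alpha * m:.3f}")                 # 0.200
print(f"independent tests:         {familywise_error(alpha, m):.3f}")  # 0.185
print(f"Bonferroni per-test alpha: {bonferroni_alpha(alpha, m):.4f}")  # 0.0125
```

Under Bonferroni, a comparison would need p < 0.0125 rather than p < 0.05 to count as significant. Dunnett’s test, designed specifically for comparing several treatment groups against one control, is a standard and less conservative alternative.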

I also don’t see any determination of whether their data followed a normal distribution (an assumption of ANOVA and t-tests). If their data violated this assumption, then their analysis was also inappropriate.

What do those doses convert to in, say, a 150 pound person?
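A rough answer, using straight per-kilogram scaling (150 lb is about 68 kg) and treating the study doses as daily amounts, which is an assumption here. The sucralose column uses the 1.1% figure from the post. Simple body-weight scaling ignores differences in metabolism between rats and humans, so treat these as orders of magnitude only.

```python
LB_TO_KG = 0.45359237  # exact definition of the avoirdupois pound

def total_dose_mg(dose_mg_per_kg, body_weight_lb):
    """Total amount (mg) for a person, scaling the dose linearly with body mass.

    Naive per-kilogram scaling: ignores interspecies differences in metabolism.
    """
    return dose_mg_per_kg * body_weight_lb * LB_TO_KG

SUCRALOSE_FRACTION = 0.011  # 1.1% sucralose, from the composition quoted in the post

for splenda_dose in (100, 300, 500, 1000):  # mg/kg of Splenda in the study
    splenda_mg = total_dose_mg(splenda_dose, 150)
    print(f"{splenda_dose:>5} mg/kg -> {splenda_mg / 1000:5.1f} g Splenda, "
          f"{splenda_mg * SUCRALOSE_FRACTION:6.1f} mg sucralose")
```

On this naive scaling, the lowest dose corresponds to roughly 6.8 g of Splenda (about 75 mg of sucralose) for a 150 lb person, and the highest to roughly 68 g of Splenda.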