I took a break this afternoon, met up with Jodi and her colleagues, and went out for coffee. While we were sitting outside, sipping our drinks, one of the students pulled out his mobile phone and showed Jodi a picture. He said he was reminded to show her the photo because we were sitting right by the spot where he took it. And what was in the picture? The carefully sculpted grapevines in the photo looked EXACTLY like a mathematical object known as a “binary-split decision tree”. I took some pictures of this as well; the one at the left shows you how the trunk (representing the data) comes in from the bottom, splits in two directions (a binary split), and then each of those branches splits into two more. The leaves at the end of the last branch are the terminal nodes of the decision tree. In physics, these structures are used to combine many variables into a complex algorithm for deciding what data to keep and what to throw away. Terminal nodes of the tree contain either signal-like data (keep) or background-like data (throw away).

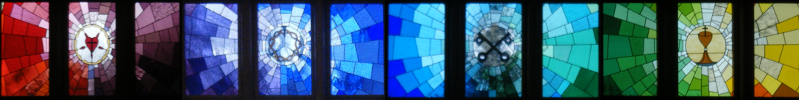
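
For the curious, here is roughly what the grapevine would look like as code. This is only a minimal sketch of a two-level binary-split decision tree, not anything taken from a real analysis: the variable names (`energy`, `angle`), the cut values, and the helper names (`Leaf`, `Split`, `classify`, `VINE`) are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    """A terminal node: the leaves at the end of the last branch."""
    label: str  # "signal" (keep) or "background" (throw away)


@dataclass
class Split:
    """A binary split: one branch dividing in two directions."""
    variable: str     # which measured quantity to test (hypothetical)
    threshold: float  # cut value (hypothetical)
    below: "Node"     # branch followed when value < threshold
    above: "Node"     # branch followed when value >= threshold


Node = Union[Leaf, Split]


def classify(node: Node, event: Dict[str, float]) -> str:
    """Walk from the trunk up to a leaf and return that leaf's label."""
    while isinstance(node, Split):
        if event[node.variable] < node.threshold:
            node = node.below
        else:
            node = node.above
    return node.label


# The trunk splits once on "energy"; each branch then splits on "angle".
VINE = Split(
    "energy", 50.0,
    below=Split("angle", 0.3, below=Leaf("background"), above=Leaf("signal")),
    above=Split("angle", 0.8, below=Leaf("signal"), above=Leaf("background")),
)

if __name__ == "__main__":
    event = {"energy": 20.0, "angle": 0.5}
    print(classify(VINE, event))
```

Each event enters at the trunk, answers one yes/no question per split, and ends up in exactly one leaf. In practice the splits and cut values are learned from labeled data rather than written by hand as they are here.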

This one grapevine was part of a sequence of carefully sculpted vines, each resembling a binary-split decision tree. The result? A Random Forest [1].

[1] L. Breiman, Machine Learning 45, 5 (2001)